If I tell you that I like my coffee with lots of milk but no sugar, you understand this immediately and no repetition of my preference is required. Similarly, if I instruct my banking app to transfer $700 from my savings account to my checking account, the order is executed correctly and promptly (with the usual confirmation prompt to safeguard against my possible error, not that of the app). So why doesn’t Sense learn instantaneously when shown an example of your garage-door opener in operation?

Machine Learning vs. Human Learning

To answer this question, consider how much variability each situation involves. Human learning, at one extreme, is exposed to a tremendous range of variability, and we somehow meta-learn to distinguish extraneous variability from meaningful content. How we achieve this is one of the major mysteries of modern science.

Applications such as electronic banking sit at the other extreme: we reduce the variability by careful design so that their input information is completely unambiguous, which makes instantaneous updates possible.

State-of-the-art machine learning applications, including computer vision, speech recognition, and device recognition by the Sense monitor, fall between those two extremes: we unfortunately cannot (yet?) match the contextual learning abilities of humans, but we must deal with more variability than typical database-driven applications such as e-banking.

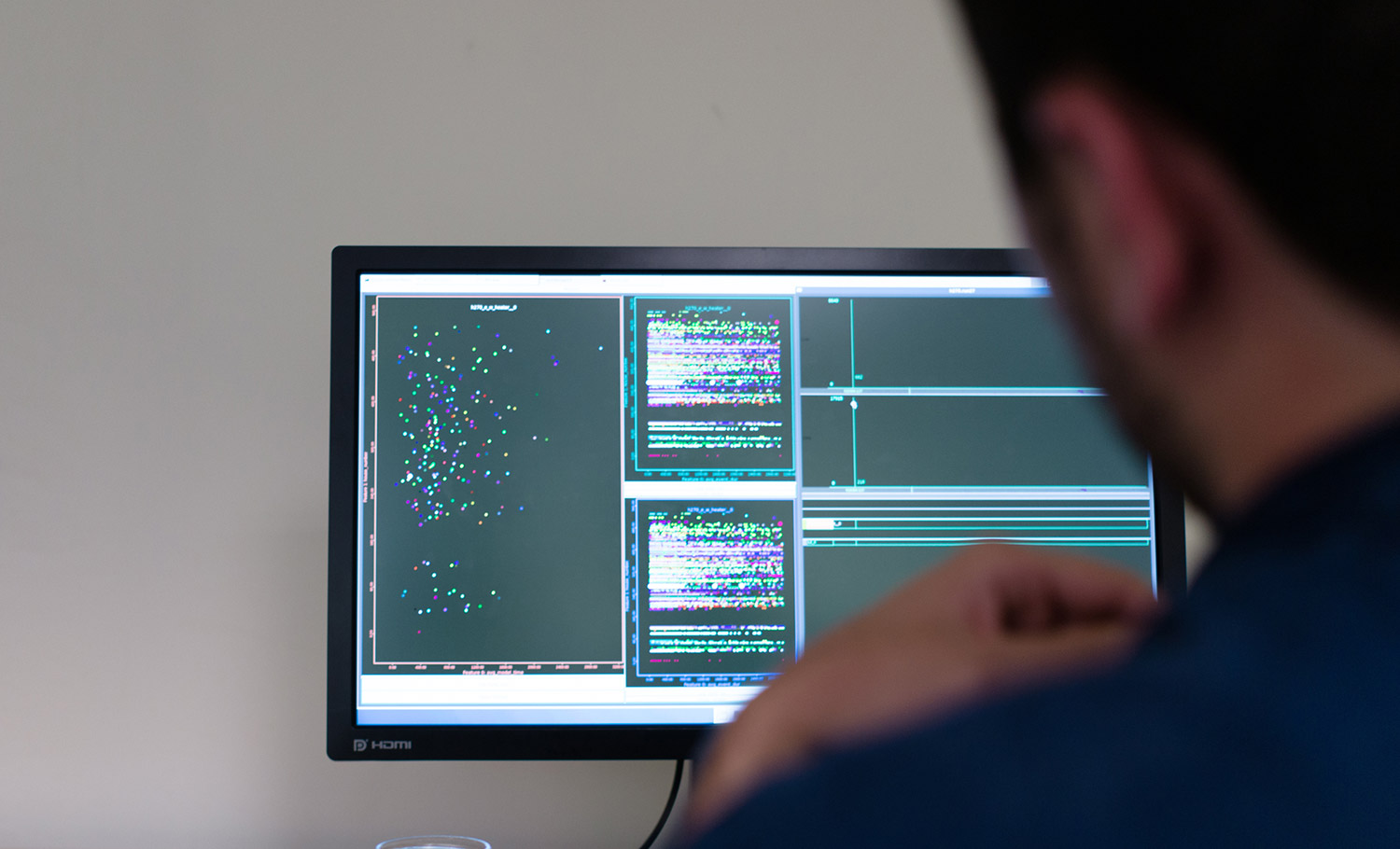

In machine learning we usually deal with variability by collecting several examples of the targeted object (garage-door opener), and of competing objects that we do not want to confuse with the target (all the other appliances in your house).

All these examples are used to train a model, with a technique such as deep learning or hidden Markov modeling, so that it reliably recognizes the object of interest. But this reliability depends on the samples being sufficiently representative of both the target class and all the other, potentially confusable, objects. Therefore, “teaching” the system with just a few examples of the targeted device is not feasible in our current paradigm.
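To make the point about representative samples concrete, here is a minimal Python sketch. It is not how Sense works: it swaps in a simple nearest-neighbor rule for deep learning or hidden Markov models, and every feature value, label, and function name (`nearest_label`, the peak-power and duration pairs) is invented for illustration.

```python
from math import dist


def nearest_label(query, labeled_examples):
    """Return the label of the training example closest to `query` (1-nearest-neighbor)."""
    _, label = min(labeled_examples, key=lambda ex: dist(ex[0], query))
    return label


# Hypothetical features: (peak power in watts, duration in seconds).
# All values are made up; they are not measurements from any real device.
others = [
    ((600.0, 12.0), "other"),    # a competing device that looks similar to the target
    ((1500.0, 60.0), "other"),
    ((150.0, 300.0), "other"),
]
one_target = [((450.0, 11.0), "garage door")]
many_targets = one_target + [
    ((480.0, 12.0), "garage door"),
    ((520.0, 13.0), "garage door"),
]

# A new garage-door event that differs somewhat from the first example.
query = (540.0, 14.0)

few_shot_guess = nearest_label(query, one_target + others)
many_shot_guess = nearest_label(query, many_targets + others)

print(few_shot_guess)   # other
print(many_shot_guess)  # garage door
```

With a single target example, the new event lands closer to the competing device and is mislabeled. Once the training set covers more of the target's natural variation, the same event is recognized correctly.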

Note that the examples shown in the figure above have rather similar wattage traces (shapes) and durations, but that there is some variability between them. The baseline values before and after each event also differ, showing that a different set of other devices was on in each instance.
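One simple way to see that two events share a shape despite different baselines is to subtract the power level measured just before each event. The Python sketch below assumes this kind of baseline removal purely for illustration; the source does not say Sense does this, and the traces, the `remove_baseline` helper, and its `pre_samples` parameter are all hypothetical.

```python
from statistics import median


def remove_baseline(trace, pre_samples=3):
    """Subtract the median of the first `pre_samples` readings from every reading."""
    baseline = median(trace[:pre_samples])
    return [watts - baseline for watts in trace]


# Two made-up recordings of the same device, in watts.
# The background load from other devices differs between them.
event_a = [200, 200, 200, 700, 690, 700, 200]
event_b = [950, 950, 950, 1460, 1440, 1450, 950]

aligned_a = remove_baseline(event_a)
aligned_b = remove_baseline(event_b)

print(aligned_a)  # [0, 0, 0, 500, 490, 500, 0]
print(aligned_b)  # [0, 0, 0, 510, 490, 500, 0]

# Largest remaining difference between the two aligned traces.
largest_gap = max(abs(a - b) for a, b in zip(aligned_a, aligned_b))
print(largest_gap)  # 10
```

After alignment, the two traces differ by only a few watts. That residual is the event-to-event variability a model still has to learn from repeated examples.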

This is why Sense needs to see many repetitions of your device working in its typical context: only then can we be sure our models account for all potential variations. The Sense integrations with smart plugs from Wemo and TP-Link have been critical in expediting this process, while also providing immediate detection of the devices plugged into them. User feedback is equally important for training our algorithms, particularly in categorizing and naming the so-called “Mystery Devices” that appear in the Sense Home app under labels such as “Heat 1” or “Motor 3”. Adding categories, names, and make/model information helps connect these contextual models to a collection of user-submitted descriptors, so that more and more devices can be discovered automatically and accurately.